Facebook has long been known as a social media site where people keep in touch with friends and family. Recently, however, the company has increased its focus on mental health by introducing AI technology designed to identify users who may be at risk of suicide, in part by analyzing the comments their friends leave on their posts.

Background of Facebook’s AI Suicide Prevention

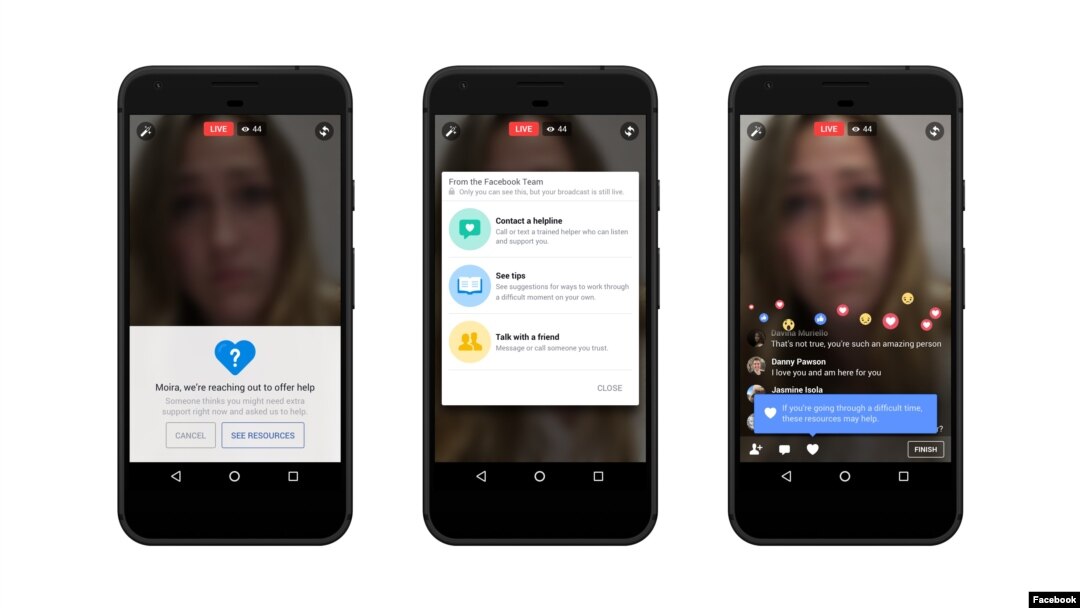

The system uses a machine learning algorithm to screen posts for content that may indicate suicidal thoughts or a risk of self-harm. The project is still in its early stages, but Facebook hopes it can help reduce suicides among the people who use its platform.

Facebook has long been aware of the risk of suicide among its users and has taken various measures to address it. In 2017, the company began using AI to monitor posts for signs of suicidal thoughts or self-harm. The signals it looks for include words or phrases that are often associated with suicide, as well as mentions of specific times or days. If a post is flagged, Facebook can reach out to the user with support resources.
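To make this kind of screening more concrete, here is a minimal rule-based sketch in Python. It is not Facebook’s implementation: the phrase list, the time-and-day pattern, and the names `screen_post` and `ScreenResult` are hypothetical, and production systems rely on trained models rather than fixed word lists. The sketch flags a post when it contains one of the example phrases and reports any mention of a time or day as supporting context.

```python
import re
from dataclasses import dataclass, field

# Hypothetical example phrases; not any real platform's list.
RISK_PHRASES = ("want to die", "end it all", "no reason to live", "goodbye everyone")

# Hypothetical pattern for mentions of a specific time or day,
# e.g. "tonight", "friday", "8pm".
TIME_PATTERN = re.compile(
    r"\b(tonight|today|tomorrow|"
    r"monday|tuesday|wednesday|thursday|friday|saturday|sunday|"
    r"\d{1,2}\s?(?:am|pm))\b",
    re.IGNORECASE,
)


@dataclass
class ScreenResult:
    phrases: list[str] = field(default_factory=list)
    time_mentions: list[str] = field(default_factory=list)

    @property
    def flagged(self) -> bool:
        # Flag only when a risk phrase is present; a time mention alone
        # is treated as supporting context, not a trigger.
        return bool(self.phrases)


def screen_post(text: str) -> ScreenResult:
    """Return which example phrases and time/day mentions appear in a post."""
    lowered = text.lower()
    phrases = [p for p in RISK_PHRASES if p in lowered]
    times = [m.group(0).lower() for m in TIME_PATTERN.finditer(text)]
    return ScreenResult(phrases, times)


print(screen_post("I just want to end it all tonight"))
# ScreenResult(phrases=['end it all'], time_mentions=['tonight'])
```

A fixed list like this is easy to audit, but it misses paraphrases and misreads jokes or song lyrics, which is one reason platforms move toward machine-learned classifiers.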

How Facebook’s AI Technology Works

The technology, called “Prevention Tool,” is designed to identify signs of possible suicide risk in a comment thread and flag that thread for review. Facebook has been testing it on a small scale for the past few months and plans to roll it out globally in the coming weeks.

The reasoning behind this move is simple: if Facebook can help prevent suicides, it will save lives. This is especially important because suicide is a leading cause of death; in the United States, for example, it was the tenth leading cause of death in 2017, and the U.S. suicide rate had been rising for nearly two decades.

So what exactly does Prevention Tool do? Essentially, it uses machine learning algorithms to identify patterns in comments that could indicate suicidal ideation or intent. Comments from friends can be especially telling: replies such as “Are you OK?” or “Can I help?” are among the signals Facebook has said it looks for. Once the tool detects a possible risk, it flags the content so that trained reviewers can decide whether to reach out to the person with support resources or, in urgent cases, contact local first responders.
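The article does not say which algorithms are involved. As an illustration of the general idea of learning patterns from labeled comments, the sketch below swaps in a textbook technique, multinomial naive Bayes with add-one smoothing, written in plain Python. The class name, the six training comments, and the labels (1 for comments that express concern, such as a friend asking whether someone is OK, 0 for ordinary comments) are all made up for this example.

```python
import math
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase words with surrounding punctuation stripped."""
    words = (w.strip(".,!?'\"").lower() for w in text.split())
    return [w for w in words if w]


class NaiveBayes:
    """Multinomial naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts: list[str], labels: list[int]) -> "NaiveBayes":
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab_size = len(set().union(*self.word_counts.values()))
        self.total_words = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_scores(self, text: str) -> dict[int, float]:
        """Log prior plus the summed log likelihood of each word, per class."""
        n_docs = sum(self.doc_counts.values())
        scores = {}
        for c in self.classes:
            score = math.log(self.doc_counts[c] / n_docs)
            denom = self.total_words[c] + self.vocab_size
            for w in tokenize(text):
                # Add-one smoothing keeps unseen words from zeroing the score.
                score += math.log((self.word_counts[c][w] + 1) / denom)
            scores[c] = score
        return scores

    def predict(self, text: str) -> int:
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


# Hypothetical toy data: 1 = comment expresses concern, 0 = ordinary comment.
TRAIN_TEXTS = [
    "are you ok? please call me",
    "can I help? I'm worried about you",
    "we're here for you, please reach out",
    "great photo, looks like fun",
    "happy birthday! have a great day",
    "congrats on the new job",
]
TRAIN_LABELS = [1, 1, 1, 0, 0, 0]

model = NaiveBayes().fit(TRAIN_TEXTS, TRAIN_LABELS)
print(model.predict("are you ok? I'm worried"))  # 1
```

With six training comments this is only a toy; a real system would need large amounts of carefully labeled data, and its outputs would still go to human reviewers.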

How Facebook Uses AI to Prevent Suicide

The AI scans the comments on a post to see whether any of them suggest that the poster may be at risk of suicide. If it finds such signs, the post is flagged so that Facebook can reach out to the user. The technology is meant to help prevent suicide among Facebook users, and Facebook has said it is working well so far.
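The sketch below shows one way to scan every comment on a post. It assumes, purely for illustration, a short list of concern phrases, a threshold of two distinct commenters, and a plain Python list standing in for a human review queue; `Comment`, `review_thread`, and the queue are hypothetical names, not part of any Facebook API.

```python
from dataclasses import dataclass

# Hypothetical concern phrases a friend might write in a reply.
CONCERN_PHRASES = ("are you ok", "can i help", "worried about you", "please call me")


@dataclass
class Comment:
    author: str
    text: str


def concerned_comments(comments: list[Comment]) -> list[Comment]:
    """Return comments containing any concern phrase (case-insensitive)."""
    return [c for c in comments if any(p in c.text.lower() for p in CONCERN_PHRASES)]


def review_thread(post_id: str, comments: list[Comment],
                  review_queue: list[str], threshold: int = 2) -> bool:
    """Queue the post for human review if enough distinct commenters sound worried."""
    worried_authors = {c.author for c in concerned_comments(comments)}
    if len(worried_authors) >= threshold:
        review_queue.append(post_id)
        return True
    return False


queue: list[str] = []
thread = [
    Comment("ana", "Are you OK?"),
    Comment("ben", "I'm worried about you, please call me"),
    Comment("cy", "Nice picture!"),
]
print(review_thread("post-1", thread, queue), queue)  # True ['post-1']
```

Counting distinct authors rather than individual comments keeps a single person posting repeatedly from triggering a review alone, while several friends independently expressing worry does.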

Conclusion

Facebook recently rolled out a new AI technology designed to identify users who may be at risk of suicide based on what appears on their profiles. The system is meant to help keep Facebook users safe, and it uses machine learning algorithms to identify potentially suicidal posts and comments. When it detects such content, it alerts Facebook’s human moderators for further review. Although this may seem like a positive step in the fight against suicide, some are concerned that it could lead to censorship and suppression of free speech.